Installing a Highly Available Kubernetes Cluster with kubeadm


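Copyright notice: all content on this blog is original. Each post is written as a record of accumulated knowledge and takes effort to produce, so please credit the source when reposting.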

Environment:

- CentOS: 7.6
- Docker: 18.06.1-ce
- Kubernetes: 1.13.4
- kubeadm: 1.13.4
- kubelet: 1.13.4
- kubectl: 1.13.4

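Deployment overview:

The highly available cluster is built in stages: first initialize a single master node, then join the other two servers as masters to form a three-master control plane, and finally join the worker nodes. The sections each node has to go through are:

- Master01: II, III, IV, V, VI, VII, VIII, IX, XI
- Master02, Master03: II, III, V, VI, IV, IX
- node01, node02: II, V, VI, IX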

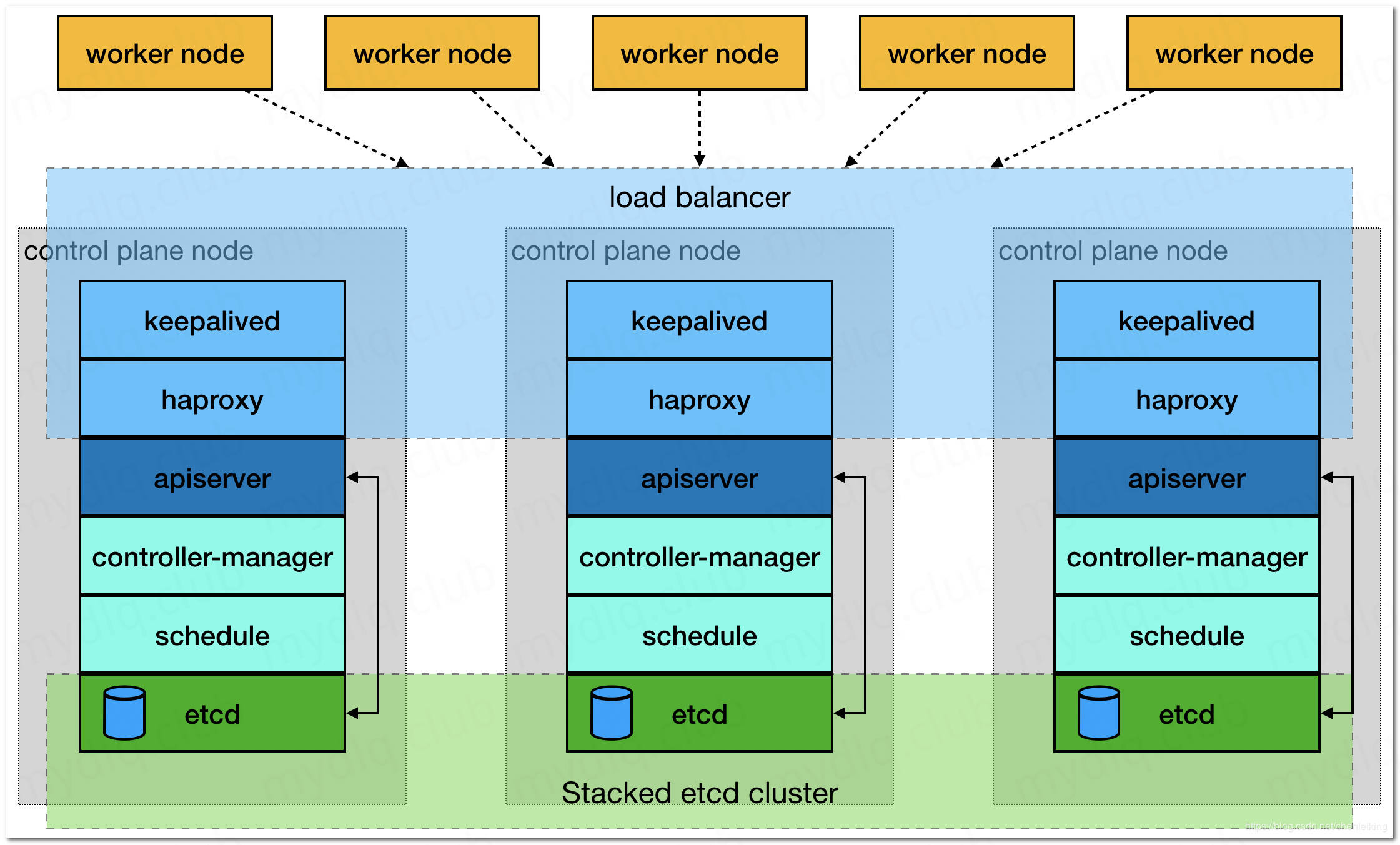

Cluster architecture:

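I. Introduction to kubeadm

What kubeadm does

kubeadm is a tool whose kubeadm init and kubeadm join commands provide a best-practice fast path for creating Kubernetes clusters.

kubeadm performs the actions necessary to get a minimum viable cluster up and running. By design, it cares only about bootstrapping the cluster, not about preparing the machines beforehand. Likewise, installing nice-to-have add-ons such as the Kubernetes Dashboard, monitoring solutions, or cloud-provider-specific add-ons is out of its scope.

Instead, the expectation is that higher-level, more tailored tooling is built on top of kubeadm; ideally, using kubeadm as the basis of all deployments makes it easier to create a cluster that meets expectations.

kubeadm commands

- kubeadm init: bootstraps a Kubernetes master node
- kubeadm join: bootstraps a Kubernetes worker node and joins it to the cluster
- kubeadm upgrade: upgrades a Kubernetes cluster to a newer version
- kubeadm config: if the cluster was initialized with kubeadm v1.7.x or lower, configures the cluster so that kubeadm upgrade can be used
- kubeadm token: manages the tokens used by kubeadm join
- kubeadm reset: reverts any changes that kubeadm init or kubeadm join made to the host
- kubeadm version: prints the kubeadm version
- kubeadm alpha: previews a set of new features in order to gather feedback from the community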

Feature maturity

| Area | Maturity Level |
|---|---|
| Command line UX | GA |
| Implementation | GA |
| Config file API | beta |
| CoreDNS | GA |
| kubeadm alpha subcommands | alpha |
| High availability | alpha |
| DynamicKubeletConfig | alpha |
| Self-hosting | alpha |

II. Preparation

1. Virtual machine allocation

| Address | Hostname | CPU & memory | Role |
|---|---|---|---|
| 192.168.2.10 | --- | --- | vip |
| 192.168.2.11 | k8s-master-01 | 2C & 2G | master |
| 192.168.2.12 | k8s-master-02 | 2C & 2G | master |
| 192.168.2.13 | k8s-master-03 | 2C & 2G | master |
| 192.168.2.21 | k8s-node-01 | 2C & 4G | node |
| 192.168.2.22 | k8s-node-02 | 2C & 4G | node |

2. Ports used on each node

- Master nodes

| Protocol | Direction | Port range | Purpose | Used by |
|---|---|---|---|---|
| TCP | Inbound | 6443 | Kubernetes API server | All |
| TCP | Inbound | 2379-2380 | etcd server client API | kube-apiserver, etcd |
| TCP | Inbound | 10250 | Kubelet API | Self, Control plane |
| TCP | Inbound | 10251 | kube-scheduler | Self |
| TCP | Inbound | 10252 | kube-controller-manager | Self |

- Worker nodes

| Protocol | Direction | Port range | Purpose | Used by |
|---|---|---|---|---|
| TCP | Inbound | 10250 | Kubelet API | Self, Control plane |
| TCP | Inbound | 30000-32767 | NodePort Services | All |

3. Base environment setup

Kubernetes needs certain system settings in place to run properly, such as time synchronization across nodes, host name resolution, and a disabled firewall.

Host name resolution

Edit hosts

On every server, open /etc/hosts for editing:

vim /etc/hosts

Add the following entries:

192.168.2.10 master.k8s.io k8s-vip
192.168.2.11 master01.k8s.io k8s-master-01
192.168.2.12 master02.k8s.io k8s-master-02
192.168.2.13 master03.k8s.io k8s-master-03
192.168.2.21 node01.k8s.io k8s-node-01
192.168.2.22 node02.k8s.io k8s-node-02
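Editing /etc/hosts by hand on five machines is easy to get wrong, so the same step can be scripted. The sketch below is only an illustration: `add_host_entry` is a hypothetical helper (not part of any tool used in this post) that appends an entry only if no line for that IP exists yet. It works on a scratch file here; pointing it at /etc/hosts as root is left to the reader.

```shell
#!/bin/sh
# add_host_entry FILE IP NAME...
# Hypothetical helper: append "IP NAME..." to FILE unless FILE already has a line for IP.
add_host_entry() (
    file=$1
    ip=$2
    shift 2
    # Escape the dots so the IP is matched literally at the start of a line.
    pattern="^$(printf '%s' "$ip" | sed 's/\./\\./g')[[:space:]]"
    if ! grep -q "$pattern" "$file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$*" >> "$file"
    fi
)

# Usage against a scratch file (replace with /etc/hosts once satisfied):
hosts_file=$(mktemp)
add_host_entry "$hosts_file" 192.168.2.10 master.k8s.io k8s-vip
add_host_entry "$hosts_file" 192.168.2.11 master01.k8s.io k8s-master-01
add_host_entry "$hosts_file" 192.168.2.10 master.k8s.io k8s-vip   # no-op: already present
cat "$hosts_file"
```

Because the helper is idempotent, it can be rerun safely when a node is rebuilt.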

Set the hostname

On each server, set the corresponding hostname:

# On 192.168.2.11
hostnamectl set-hostname k8s-master-01
# On 192.168.2.12
hostnamectl set-hostname k8s-master-02
# On 192.168.2.13
hostnamectl set-hostname k8s-master-03

# On 192.168.2.21
hostnamectl set-hostname k8s-node-01
# On 192.168.2.22
hostnamectl set-hostname k8s-node-02

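Time synchronization

Synchronize the clocks of all servers and enable the time synchronization service at boot:

systemctl start chronyd.service
systemctl enable chronyd.service

Disable the firewall service

systemctl stop firewalld
systemctl disable firewalld

Turn off and disable SELinux

# Switch SELinux to permissive mode for the running system
setenforce 0

# Edit /etc/selinux/config to disable SELinux permanently (takes effect after a reboot)
sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config

# Check the SELinux status
getenforce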

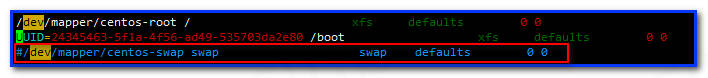

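Disable swap devices

By default, kubeadm checks in advance whether swap is disabled on the host. If swap is enabled, the installation will not proceed, so all swap devices have to be disabled.

# Turn off all currently enabled swap devices
swapoff -a && sysctl -w vm.swappiness=0

# Edit fstab and comment out every line that describes a swap device
vi /etc/fstab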
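The fstab edit can also be done without opening an editor. The following is a sketch under assumptions: `comment_swap_lines` is a hypothetical helper, and it treats any uncommented line containing a whitespace-delimited `swap` field as a swap entry. It keeps a `.bak` copy of the file; the demonstration runs on a scratch file rather than the real /etc/fstab.

```shell
#!/bin/sh
# comment_swap_lines FILE
# Hypothetical helper: prefix '#' to every uncommented line that has a "swap" field.
# A backup of the original is left next to it as FILE.bak.
comment_swap_lines() (
    file=$1
    sed -i.bak -E '/^[[:space:]]*#/!s/^(.*[[:space:]]swap[[:space:]].*)$/#\1/' "$file"
)

# Demonstration on a scratch copy with two typical entries:
fstab_copy=$(mktemp)
printf '%s\n' \
    '/dev/mapper/centos-root / xfs defaults 0 0' \
    '/dev/mapper/centos-swap swap swap defaults 0 0' > "$fstab_copy"
comment_swap_lines "$fstab_copy"
cat "$fstab_copy"
```

Lines that are already commented out are skipped, so running the helper twice does not stack `#` characters.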

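Set kernel parameters

Enable IP forwarding and make bridged traffic pass through iptables and ip6tables. Create the file /etc/sysctl.d/k8s.conf and put the settings in it.

vim /etc/sysctl.d/k8s.conf

Add the following:

net.ipv4.ip_forward = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1

Load the br_netfilter module

modprobe br_netfilter

Apply the settings

sysctl -p /etc/sysctl.d/k8s.conf

The sysctl command configures kernel parameters at runtime.

Check that the corresponding files have been created

ls /proc/sys/net/bridge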

Resource limits

/etc/security/limits.conf is the Linux resource limits file; it restricts how much of the system's resources users may consume.

echo "* soft nofile 65536" >> /etc/security/limits.conf
echo "* hard nofile 65536" >> /etc/security/limits.conf
echo "* soft nproc 65536" >> /etc/security/limits.conf
echo "* hard nproc 65536" >> /etc/security/limits.conf
echo "* soft memlock unlimited" >> /etc/security/limits.conf
echo "* hard memlock unlimited" >> /etc/security/limits.conf

Install dependencies and related tools

yum install -y epel-release

yum install -y yum-utils device-mapper-persistent-data lvm2 net-tools conntrack-tools wget vim ntpdate libseccomp libtool-ltdl

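III. Installing Keepalived

- About Keepalived: a service used in cluster management to keep a cluster highly available. It is similar in function to heartbeat and guards against single points of failure.
- Role of Keepalived here: it provides the VIP (192.168.2.10) for HAProxy and runs the three HAProxy instances in an active/standby arrangement, reducing the impact on the service when one HAProxy instance fails.

1. Install Keepalived with yum

# Install keepalived
yum install -y keepalived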

2. Configure Keepalived

cat <<EOF > /etc/keepalived/keepalived.conf
! Configuration File for keepalived

# Global definitions: mainly the notification targets and the machine identifier.
global_defs {
    # String identifying this node, usually the hostname (though it does not have to be).
    # It is used in e-mail notifications when a failure occurs.
    router_id LVS_k8s
}

# Health check. When the check fails, the priority of the vrrp_instance is reduced by the given weight.
vrrp_script check_haproxy {
    script "killall -0 haproxy"   # check by process name whether haproxy is alive
    interval 3
    weight -2
    fall 10
    rise 2
}

# vrrp_instance defines the VIP that is served to clients and its related attributes.
vrrp_instance VI_1 {
    state MASTER                  # MASTER on this node; BACKUP on the other two nodes
    interface ens33               # change to your own network interface
    virtual_router_id 51
    priority 250
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 35f18af7190d51c9f7f78f37300a0cbd
    }
    virtual_ipaddress {
        192.168.2.10              # the virtual IP (VIP)
    }
    track_script {
        check_haproxy
    }
}
EOF

On the current node, state is set to MASTER; on the other two nodes, set it to BACKUP.

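Configuration notes:

- virtual_ipaddress: the VIP.
- track_script: runs the check script defined above.
- interface: the network interface that carries the node's own (non-VIP) IP address; VRRP packets are sent through it.
- virtual_router_id: a value in the range 1-255 that distinguishes the VRRP multicast traffic of different instances.
- advert_int: the interval between VRRP advertisements, that is, how often a master election takes place (it can be thought of as the health-check interval).
- authentication: the authentication block. The available types are PASS and AH (IPsec); PASS is recommended (only the first 8 characters of the password are used).
- state: either MASTER or BACKUP. However, once Keepalived starts on the other nodes, the node with the highest priority is elected MASTER, so this setting has little practical effect.
- priority: used for master election. For a node to become master, its value should preferably be about 50 points higher than that of the other machines. The valid range is 1-255 (values outside this range fall back to the default of 100).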
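How `weight` and `priority` interact is worth working through with numbers. The sketch below models the usual Keepalived rule as I understand it: a negative weight is subtracted from the priority while the check is failing, and a positive weight is added while it succeeds. `effective_priority` is a hypothetical helper, and the BACKUP priority of 200 is an assumed value, since this post only gives the MASTER priority (250).

```shell
#!/bin/sh
# effective_priority BASE WEIGHT CHECK_OK
# Hypothetical model of how a tracked vrrp_script adjusts a node's priority.
# CHECK_OK is "yes" when the script succeeds and "no" when it fails.
effective_priority() (
    base=$1
    weight=$2
    check_ok=$3
    if [ "$weight" -lt 0 ] && [ "$check_ok" = "no" ]; then
        echo $((base + weight))       # failing check lowers the priority
    elif [ "$weight" -gt 0 ] && [ "$check_ok" = "yes" ]; then
        echo $((base + weight))       # passing check raises the priority
    else
        echo "$base"
    fi
)

effective_priority 250 -2 no     # MASTER from this post, HAProxy down
effective_priority 200 -2 yes    # assumed BACKUP priority, HAProxy up
```

Under these assumptions the MASTER drops only to 248, which is still above 200, so the VIP would not move when HAProxy alone fails. If failover on an HAProxy failure is wanted, the magnitude of `weight` needs to exceed the priority gap between the nodes, or the priorities need to be closer together.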

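3. Start Keepalived

# Enable at boot
systemctl enable keepalived
# Start keepalived
systemctl start keepalived
# Check the status
systemctl status keepalived

4. Check the network state

After Keepalived has started on the node whose state is MASTER, check the network state; the virtual IP has been added to the bound interface:

ip address show ens33

When the Keepalived service on this node is stopped, the virtual IP moves: one of the BACKUP nodes is elected as the new MASTER, and the virtual IP then shows up on that node's interface.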

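IV. Installing HAProxy

HAProxy acts here as a reverse proxy in front of the apiserver and forwards all requests to the master nodes in round-robin fashion. Compared with a plain Keepalived active/standby setup, in which a single master carries all the traffic, this approach is more balanced and more robust.

1. Install HAProxy with yum

yum install -y haproxy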

2. Configure HAProxy

cat > /etc/haproxy/haproxy.cfg << EOF
#---------------------------------------------------------------------
# Global settings
#---------------------------------------------------------------------
global
    # to have these messages end up in /var/log/haproxy.log you will
    # need to:
    # 1) configure syslog to accept network log events. This is done
    #    by adding the '-r' option to the SYSLOGD_OPTIONS in
    #    /etc/sysconfig/syslog
    # 2) configure local2 events to go to the /var/log/haproxy.log
    #    file. A line like the following can be added to
    #    /etc/sysconfig/syslog
    #
    #    local2.*                       /var/log/haproxy.log
    #
    log         127.0.0.1 local2

    chroot      /var/lib/haproxy
    pidfile     /var/run/haproxy.pid
    maxconn     4000
    user        haproxy
    group       haproxy
    daemon

    # turn on stats unix socket
    stats socket /var/lib/haproxy/stats
#---------------------------------------------------------------------
# common defaults that all the 'listen' and 'backend' sections will
# use if not designated in their block
#---------------------------------------------------------------------
defaults
    mode                    http
    log                     global
    option                  httplog
    option                  dontlognull
    option http-server-close
    option forwardfor       except 127.0.0.0/8
    option                  redispatch
    retries                 3
    timeout http-request    10s
    timeout queue           1m
    timeout connect         10s
    timeout client          1m
    timeout server          1m
    timeout http-keep-alive 10s
    timeout check           10s
    maxconn                 3000
#---------------------------------------------------------------------
# kubernetes apiserver frontend which proxys to the backends
#---------------------------------------------------------------------
frontend kubernetes-apiserver
    mode                 tcp
    bind                 *:16443
    option               tcplog
    default_backend      kubernetes-apiserver
#---------------------------------------------------------------------
# round robin balancing between the various backends
#---------------------------------------------------------------------
backend kubernetes-apiserver
    mode        tcp
    balance     roundrobin
    server      master01.k8s.io   192.168.2.11:6443 check
    server      master02.k8s.io   192.168.2.12:6443 check
    server      master03.k8s.io   192.168.2.13:6443 check
#---------------------------------------------------------------------
# collection haproxy statistics message
#---------------------------------------------------------------------
listen stats
    bind                 *:1080
    stats auth           admin:awesomePassword
    stats refresh        5s
    stats realm          HAProxy\ Statistics
    stats uri            /admin?stats
EOF

The HAProxy configuration is identical on the other master nodes (192.168.2.12 and 192.168.2.13).

3. Start and check HAProxy

# Enable at boot
systemctl enable haproxy
# Start haproxy
systemctl start haproxy
# Check the status
systemctl status haproxy

4. Check the HAProxy ports

ss -lnt | grep -E "16443|1080"

Both ports should be listed as listening.
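The port check can be made stricter than a plain grep, which would also match unrelated port numbers that merely contain the same digits. The sketch below assumes the usual `ss -lnt` column layout (state first, local address:port fourth); `missing_ports` is a hypothetical helper that reads that output on standard input and prints every requested port that has no listening socket.

```shell
#!/bin/sh
# missing_ports PORT...
# Hypothetical helper: reads `ss -lnt` output on stdin and prints each PORT
# for which no LISTEN socket is found. Prints nothing when all ports are up.
missing_ports() (
    input=$(cat)
    for port in "$@"; do
        # Column 4 is the local "address:port"; match the port exactly at its end.
        if ! printf '%s\n' "$input" |
            awk -v p="$port" '$1 == "LISTEN" && $4 ~ (":" p "$") { found = 1 } END { exit !found }'
        then
            echo "$port"
        fi
    done
)

# Typical use on a master node:
#   ss -lnt | missing_ports 16443 1080
```

An empty result means both the apiserver frontend (16443) and the statistics page (1080) are listening.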

V. Installing Docker (all nodes)

1. Remove any previously installed Docker

sudo yum remove docker \
    docker-client \
    docker-client-latest \
    docker-common \
    docker-latest \
    docker-latest-logrotate \
    docker-logrotate \
    docker-selinux \
    docker-engine-selinux \
    docker-ce-cli \
    docker-engine

Check whether any Docker components are left:

rpm -qa|grep docker

If there are, remove them with yum -y remove XXX, for example:

yum remove docker-ce-cli

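2. Configure the Docker yum repository

Docker can be installed from the official yum repository, but downloads from it are rather slow, so the Aliyun mirror is recommended:

sudo yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo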

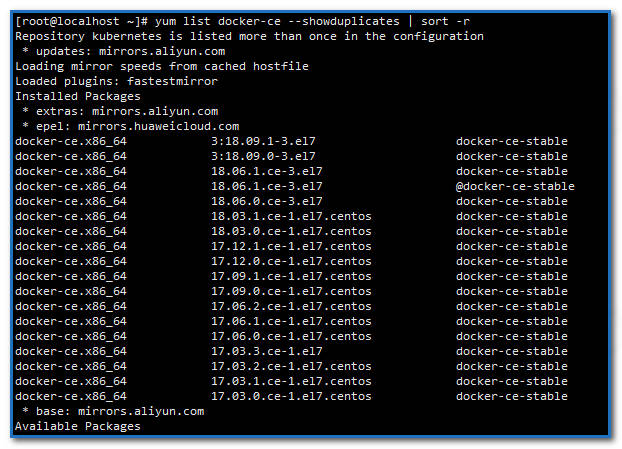

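3. Install Docker

List all installable docker-ce versions:

yum list docker-ce --showduplicates | sort -r

Install the chosen Docker version:

sudo yum install docker-ce-18.06.1.ce-3.el7 -y

Set the image storage directory

Pick a mounted directory with plenty of space for image storage.

# Edit the Docker unit file
vi /lib/systemd/system/docker.service

# Find the ExecStart line and append the storage directory, here --graph /apps/docker
ExecStart=/usr/bin/dockerd --graph /apps/docker

Start Docker and enable it at boot

# Reload unit files so that the edited docker.service is picked up
systemctl daemon-reload
systemctl enable docker
systemctl start docker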

4. Check iptables

Confirm that the default policy of the FORWARD chain in the iptables filter table is ACCEPT.

iptables -nvL

Output:

Chain FORWARD (policy ACCEPT 0 packets, 0 bytes)
 pkts bytes target                    prot opt in      out      source     destination
    0     0 DOCKER-USER               all  --  *       *        0.0.0.0/0  0.0.0.0/0
    0     0 DOCKER-ISOLATION-STAGE-1  all  --  *       *        0.0.0.0/0  0.0.0.0/0
    0     0 ACCEPT                    all  --  *       docker0  0.0.0.0/0  0.0.0.0/0  ctstate RELATED,ESTABLISHED
    0     0 DOCKER                    all  --  *       docker0  0.0.0.0/0  0.0.0.0/0
    0     0 ACCEPT                    all  --  docker0 !docker0 0.0.0.0/0  0.0.0.0/0
    0     0 ACCEPT                    all  --  docker0 docker0  0.0.0.0/0  0.0.0.0/0

Starting with version 1.13, Docker changed its default firewall rules and disabled the FORWARD chain in the iptables filter table, which prevents Pods on different nodes of a Kubernetes cluster from communicating. With Docker 18.06 installed here, however, the default policy turned out to be ACCEPT again. It is not clear in which version this was reverted; the 17.06 release we run in production still needs the policy adjusted by hand.

VI. Installing kubeadm, kubelet, and kubectl

The following packages need to be installed on every machine:

- kubeadm: the command used to bootstrap the cluster.
- kubelet: runs on every node in the cluster and starts pods, containers, and so on.
- kubectl: the command-line tool for talking to the cluster.

1. Configure a yum repository reachable from within China:

cat <<EOF > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
EOF

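2. Install kubectl

List the available kubectl versions

yum list kubectl --showduplicates | sort -r

Install kubectl

yum install -y kubectl-1.13.4-0

3. Install kubelet

List the available kubelet versions

yum list kubelet --showduplicates | sort -r

Install kubelet

yum install -y kubelet-1.13.4-0

Start kubelet and enable it at boot

systemctl enable kubelet
systemctl start kubelet

Check the status

The status shows as failed at this point, which is expected: kubelet restarts every 10 seconds until the master node has been initialized, after which it runs normally.

systemctl status kubelet

4. Install kubeadm

List the available kubeadm versions

yum list kubeadm --showduplicates | sort -r

Install kubeadm

yum install -y kubeadm-1.13.4-0

Installing kubeadm pulls in kubectl as a dependency, so kubectl does not strictly need to be installed separately; installing it explicitly as in step 2 pins its version.

5. Reboot the servers

To avoid unexpected errors, reboot the servers here before continuing with the next steps.

reboot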

VII. Initializing the first Kubernetes master node

Because the cluster endpoint is bound to the virtual IP, first check which of the master machines currently holds the virtual IP:

ip address show ens33

Output:

ens33: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP group default qlen 1000
    link/ether 00:0c:29:7e:65:b3 brd ff:ff:ff:ff:ff:ff
    inet 192.168.2.11/24 brd 192.168.2.255 scope global noprefixroute ens33
       valid_lft forever preferred_lft forever
    inet 192.168.2.10/32 scope global ens33
       valid_lft forever preferred_lft forever

The virtual IP 192.168.2.10 and the address 192.168.2.11 are on the same machine, so the first Kubernetes master has to be initialized on master01.

1. Create the kubeadm configuration YAML file

cat > kubeadm-config.yaml << EOF
apiServer:
  certSANs:
    - k8s-master-01
    - k8s-master-02
    - k8s-master-03
    - master.k8s.io
    - 192.168.2.10
    - 192.168.2.11
    - 192.168.2.12
    - 192.168.2.13
    - 127.0.0.1
  extraArgs:
    authorization-mode: Node,RBAC
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta1
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: "master.k8s.io:16443"
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.13.4
networking:
  dnsDomain: cluster.local
  podSubnet: 10.20.0.0/16
  serviceSubnet: 10.10.0.0/16
scheduler: {}
EOF

Two settings need attention:

- certSANs: the virtual IP address (to be on the safe side, list every address in the cluster)
- controlPlaneEndpoint: the virtual IP (here via its hostname master.k8s.io) followed by the port HAProxy listens on

Configuration notes:

- imageRepository: registry.aliyuncs.com/google_containers (the Aliyun image registry)
- podSubnet: 10.20.0.0/16 (the pod address pool)
- serviceSubnet: 10.10.0.0/16 (the service address pool)
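The pod, service, and host networks should not overlap with one another. This post does not state that requirement explicitly, but it is a common source of routing problems, so a small pre-flight check may help. The sketch below uses plain shell arithmetic; `ip_to_int` and `cidr_overlap` are hypothetical helpers, and the host network 192.168.2.0/24 is inferred from the addresses in the VM table.

```shell
#!/bin/sh
# ip_to_int A.B.C.D
# Convert a dotted-quad IPv4 address to its 32-bit integer value.
ip_to_int() (
    IFS=.
    set -- $1
    echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
)

# cidr_overlap CIDR1 CIDR2
# Succeeds (exit 0) when the two IPv4 CIDR ranges overlap.
# Two ranges overlap exactly when they agree on the shorter of the two prefixes.
cidr_overlap() (
    a_ip=${1%/*}; a_len=${1#*/}
    b_ip=${2%/*}; b_len=${2#*/}
    len=$a_len
    if [ "$b_len" -lt "$len" ]; then len=$b_len; fi
    mask=$(( (4294967295 << (32 - len)) & 4294967295 ))
    [ $(( $(ip_to_int "$a_ip") & mask )) -eq $(( $(ip_to_int "$b_ip") & mask )) ]
)

# Check the three networks used in this post against each other.
for pair in "10.20.0.0/16 10.10.0.0/16" "10.20.0.0/16 192.168.2.0/24" "10.10.0.0/16 192.168.2.0/24"; do
    set -- $pair
    if cidr_overlap "$1" "$2"; then
        echo "overlap: $1 and $2"
    else
        echo "ok: $1 and $2"
    fi
done
```

With the values from kubeadm-config.yaml, all three pairs are reported as non-overlapping.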

2. Initialize the first master node

kubeadm init --config kubeadm-config.yaml

Log output:

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.
Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:
  https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node
as root:

  kubeadm join master.k8s.io:16443 --token dm3cw1.kw4hq84ie1376hji --discovery-token-ca-cert-hash sha256:f079b624773145ba714b56e177f52143f90f75a1dcebabda6538a49e224d4009

The log shows the command that nodes use to join the cluster:

kubeadm join master.k8s.io:16443 --token dm3cw1.kw4hq84ie1376hji --discovery-token-ca-cert-hash sha256:f079b624773145ba714b56e177f52143f90f75a1dcebabda6538a49e224d4009

3. Configure the kubectl environment

Set up the kubeconfig for the current user:

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

4. Check component status

kubectl get cs

Output:

NAME                 STATUS    MESSAGE              ERROR
controller-manager   Healthy   ok
scheduler            Healthy   ok
etcd-0               Healthy   {"health": "true"}

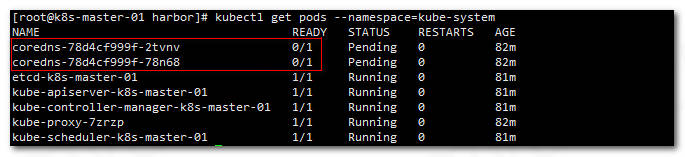

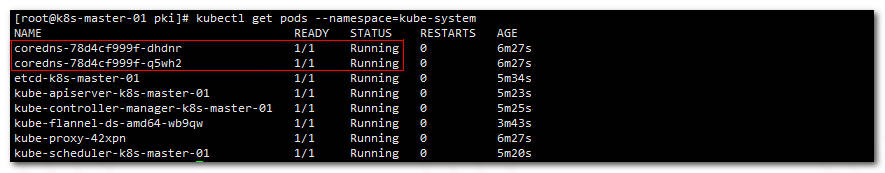

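Check pod status

kubectl get pods --namespace=kube-system

The output shows that coredns has not started yet. This is because no network plugin has been configured so far; check the status again once the plugin has been set up in the next section.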

VIII. Installing the network plugin

1. Create the YAML file for the flannel plugin

cat > kube-flannel.yaml << EOF
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system
---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.20.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }
---
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      hostNetwork: true
      nodeSelector:
        beta.kubernetes.io/arch: amd64
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: registry.cn-shenzhen.aliyuncs.com/cp_m/flannel:v0.10.0-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: registry.cn-shenzhen.aliyuncs.com/cp_m/flannel:v0.10.0-amd64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: true
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg
EOF

The value "Network": "10.20.0.0/16" must be the same as podSubnet: 10.20.0.0/16 in kubeadm-config.yaml.

2. Create the flannel roles and pods

kubectl apply -f kube-flannel.yaml

Wait a little while, then check the status of the pods again:

kubectl get pods --namespace=kube-system

The output now shows that coredns has started.
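Because the flannel Network has to match the podSubnet in kubeadm-config.yaml, it can be worth comparing the two files before applying the manifest. The sketch below is a text-level check only, not a YAML parser; `pod_subnet_of`, `flannel_network_of`, and `check_pod_cidr` are hypothetical helpers, and the file names are the ones used in this post.

```shell
#!/bin/sh
# pod_subnet_of FILE: print the first podSubnet value found in a kubeadm config.
pod_subnet_of() {
    sed -n 's/^[[:space:]]*podSubnet:[[:space:]]*//p' "$1" | head -n 1
}

# flannel_network_of FILE: print the first "Network" value in a flannel manifest.
flannel_network_of() {
    sed -n 's/.*"Network":[[:space:]]*"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# check_pod_cidr KUBEADM_CONFIG FLANNEL_MANIFEST
# Succeeds and prints "OK: <cidr>" when both files agree; fails otherwise.
check_pod_cidr() {
    want=$(pod_subnet_of "$1")
    got=$(flannel_network_of "$2")
    if [ -n "$want" ] && [ "$want" = "$got" ]; then
        echo "OK: $want"
    else
        echo "MISMATCH: podSubnet=$want flannel Network=$got" >&2
        return 1
    fi
}

# Typical use, in the directory holding both files:
#   check_pod_cidr kubeadm-config.yaml kube-flannel.yaml && kubectl apply -f kube-flannel.yaml
```

A mismatch usually shows up later as pods that cannot reach each other across nodes, so catching it before `kubectl apply` saves a reset.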

IX. Joining the cluster

1. Join the other masters to form the highly available control plane

Copy the certificates and keys to the other masters

Run the commands below on master01 to copy the Kubernetes files to master02 and master03.

If a different node was initialized as the first master, copy the files from that node to the other two masters instead. For example, if master03 was the first master, copy its Kubernetes configuration to master02 and master01.

- Copy the files to master02

ssh root@master02.k8s.io mkdir -p /etc/kubernetes/pki/etcd
scp /etc/kubernetes/admin.conf root@master02.k8s.io:/etc/kubernetes
scp /etc/kubernetes/pki/{ca.*,sa.*,front-proxy-ca.*} root@master02.k8s.io:/etc/kubernetes/pki
scp /etc/kubernetes/pki/etcd/ca.* root@master02.k8s.io:/etc/kubernetes/pki/etcd

- Copy the files to master03

ssh root@master03.k8s.io mkdir -p /etc/kubernetes/pki/etcd
scp /etc/kubernetes/admin.conf root@master03.k8s.io:/etc/kubernetes
scp /etc/kubernetes/pki/{ca.*,sa.*,front-proxy-ca.*} root@master03.k8s.io:/etc/kubernetes/pki
scp /etc/kubernetes/pki/etcd/ca.* root@master03.k8s.io:/etc/kubernetes/pki/etcd
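The two copy blocks differ only in the target host, so they can be folded into a loop. The sketch below is one possible way to do that, not the method used in this post: `run` and `copy_control_plane_certs` are hypothetical helpers, and `DRY_RUN` is an assumed switch that defaults to printing the commands instead of executing them, so the result can be reviewed first.

```shell
#!/bin/sh
# run CMD...: print the command when DRY_RUN=1 (the default here), else execute it.
run() {
    if [ "${DRY_RUN:-1}" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# copy_control_plane_certs HOST...
# Copy admin.conf plus the CA, service-account, and front-proxy CA files
# to each target master, mirroring the manual commands above.
copy_control_plane_certs() {
    for host in "$@"; do
        run ssh "root@$host" mkdir -p /etc/kubernetes/pki/etcd
        run scp /etc/kubernetes/admin.conf "root@$host:/etc/kubernetes"
        run scp /etc/kubernetes/pki/ca.* /etc/kubernetes/pki/sa.* \
            /etc/kubernetes/pki/front-proxy-ca.* "root@$host:/etc/kubernetes/pki"
        run scp /etc/kubernetes/pki/etcd/ca.* "root@$host:/etc/kubernetes/pki/etcd"
    done
}

# Dry run: prints the eight commands for the two remaining masters.
copy_control_plane_certs master02.k8s.io master03.k8s.io
```

Running it with `DRY_RUN=0` on the first master performs the copies for real.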

- Join the master nodes to the cluster

Run the join command on both master02 and master03:

kubeadm join master.k8s.io:16443 --token dm3cw1.kw4hq84ie1376hji --discovery-token-ca-cert-hash sha256:f079b624773145ba714b56e177f52143f90f75a1dcebabda6538a49e224d4009 --experimental-control-plane

If joining fails and you want to retry, run kubeadm reset to clear the previous settings, then repeat the two steps "copy the certificates and keys" and "join the cluster".

Output of the join process:

......
This node has joined the cluster and a new control plane instance was created:

* Certificate signing request was sent to apiserver and approval was received.
* The Kubelet was informed of the new secure connection details.
* Master label and taint were applied to the new node.
* The Kubernetes control plane instances scaled up.
* A new etcd member was added to the local/stacked etcd cluster.

To start administering your cluster from this node, you need to run the following as a regular user:

  mkdir -p $HOME/.kube
  sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
  sudo chown $(id -u):$(id -g) $HOME/.kube/config

Run 'kubectl get nodes' to see this node join the cluster.

- Configure the kubectl environment

mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config

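2. Join the worker nodes to the cluster

Besides the master nodes that form the highly available control plane, the worker nodes also have to join the cluster.

Here k8s-node-01 and k8s-node-02 are joined to the cluster to run workloads.

Run the join command that was printed when the first master was initialized:

kubeadm join master.k8s.io:16443 --token dm3cw1.kw4hq84ie1376hji --discovery-token-ca-cert-hash sha256:f079b624773145ba714b56e177f52143f90f75a1dcebabda6538a49e224d4009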

3. If you have lost the join token or the sha256 hash (skip this step otherwise)

- List the existing tokens

kubeadm token list

By default a token expires after 24 hours. Once it has expired, generate a new one with:

kubeadm token create

- Get the sha256 hash of the CA certificate

openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | openssl dgst -sha256 -hex | sed 's/^.* //'

- Assemble the command

kubeadm join master.k8s.io:16443 --token 882ik4.9ib2kb0eftvuhb58 --discovery-token-ca-cert-hash sha256:0b1a836894d930c8558b350feeac8210c85c9d35b6d91fde202b870f3244016a

When joining a master, append the --experimental-control-plane flag at the end.
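Assembling the join command by hand invites copy-and-paste mistakes, so the step can be wrapped in a small function. The sketch below is illustrative: `build_join_command` is a hypothetical helper that only formats and sanity-checks its arguments and does not call kubeadm. As far as I know, kubeadm can also print a ready-made worker join command with `kubeadm token create --print-join-command`.

```shell
#!/bin/sh
# build_join_command ENDPOINT TOKEN HASH [ROLE]
# Hypothetical helper: print the kubeadm join command for a worker (default)
# or, with ROLE=master, for an additional control-plane node.
build_join_command() (
    endpoint=$1
    token=$2
    hash=$3
    role=${4:-worker}
    # Bootstrap tokens have the form <6 chars>.<16 chars>, lowercase letters and digits.
    if ! printf '%s' "$token" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'; then
        echo "invalid token format: $token" >&2
        exit 1
    fi
    cmd="kubeadm join $endpoint --token $token --discovery-token-ca-cert-hash sha256:$hash"
    if [ "$role" = "master" ]; then
        cmd="$cmd --experimental-control-plane"
    fi
    echo "$cmd"
)

# Example with the token and hash shown above:
build_join_command master.k8s.io:16443 882ik4.9ib2kb0eftvuhb58 \
    0b1a836894d930c8558b350feeac8210c85c9d35b6d91fde202b870f3244016a master
```

The HASH argument is the output of the openssl pipeline above, without the `sha256:` prefix.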

4. Check that all nodes have joined the cluster

kubectl get nodes -o wide

Output:

NAME            STATUS   ROLES    AGE   VERSION   INTERNAL-IP    EXTERNAL-IP   OS-IMAGE                KERNEL-VERSION              CONTAINER-RUNTIME
k8s-master-01   Ready    master   12m   v1.13.4   192.168.2.11   <none>        CentOS Linux 7 (Core)   3.10.0-957.1.3.el7.x86_64   docker://18.6.1
k8s-master-02   Ready    master   10m   v1.13.4   192.168.2.12   <none>        CentOS Linux 7 (Core)   3.10.0-957.1.3.el7.x86_64   docker://18.6.1
k8s-master-03   Ready    master   38m   v1.13.4   192.168.2.13   <none>        CentOS Linux 7 (Core)   3.10.0-957.1.3.el7.x86_64   docker://18.6.1
k8s-node-01     Ready    <none>   68s   v1.13.4   192.168.2.21   <none>        CentOS Linux 7 (Core)   3.10.0-957.1.3.el7.x86_64   docker://18.6.1
k8s-node-02     Ready    <none>   61s   v1.13.4   192.168.2.22   <none>        CentOS Linux 7 (Core)   3.10.0-957.1.3.el7.x86_64   docker://18.6.1
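When scripting the setup, the node table can be reduced to a list of nodes that are not yet ready. The sketch below assumes the column layout shown above (name first, status second, with a header row); `not_ready_nodes` is a hypothetical helper that reads the `kubectl get nodes` output on standard input.

```shell
#!/bin/sh
# not_ready_nodes
# Hypothetical helper: reads `kubectl get nodes` output (with header) on stdin
# and prints the name of every node whose STATUS column is not exactly "Ready".
not_ready_nodes() {
    awk 'NR > 1 && $2 != "Ready" { print $1 }'
}

# Typical use:
#   kubectl get nodes | not_ready_nodes
```

Note that a cordoned node reports a status such as `Ready,SchedulingDisabled` and is therefore listed as well, which is usually what one wants when checking for schedulable capacity.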

X. Removing a node from the cluster

- On a master node:

kubectl drain <node name> --delete-local-data --force --ignore-daemonsets
kubectl delete node <node name>

- On the node being removed:

kubeadm reset

XI. Configuring the Dashboard

The Dashboard only needs to be deployed from one server; it is a cluster-level Deployment, and the cluster takes care of placing its pod. Here it is deployed from master01.

1. Create kubernetes-dashboard.yaml and start the Dashboard

# ------------------- Dashboard Secret ------------------- #
apiVersion: v1
kind: Secret
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard-certs
  namespace: kube-system
type: Opaque

---
# ------------------- Dashboard Service Account ------------------- #

apiVersion: v1
kind: ServiceAccount
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system

---
# ------------------- Dashboard Role & Role Binding ------------------- #

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
rules:
  # Allow Dashboard to create 'kubernetes-dashboard-key-holder' secret.
- apiGroups: [""]
  resources: ["secrets"]
  verbs: ["create"]
  # Allow Dashboard to create 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["create"]
  # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
- apiGroups: [""]
  resources: ["secrets"]
  resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
  verbs: ["get", "update", "delete"]
  # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
- apiGroups: [""]
  resources: ["configmaps"]
  resourceNames: ["kubernetes-dashboard-settings"]
  verbs: ["get", "update"]
  # Allow Dashboard to get metrics from heapster.
- apiGroups: [""]
  resources: ["services"]
  resourceNames: ["heapster"]
  verbs: ["proxy"]
- apiGroups: [""]
  resources: ["services/proxy"]
  resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
  verbs: ["get"]

---

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: kubernetes-dashboard-minimal
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: kubernetes-dashboard-minimal
subjects:
- kind: ServiceAccount
  name: kubernetes-dashboard
  namespace: kube-system

---

# ------------------- Dashboard Deployment ------------------- #
# 1. The image registry was changed; edit it to point at your own registry
# 2. The image pull policy was changed to imagePullPolicy: IfNotPresent
kind: Deployment
apiVersion: apps/v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  replicas: 1
  revisionHistoryLimit: 10
  selector:
    matchLabels:
      k8s-app: kubernetes-dashboard
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
    spec:
      containers:
      - name: kubernetes-dashboard
        image: registry.cn-hangzhou.aliyuncs.com/google_containers/kubernetes-dashboard-amd64:v1.10.1
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8443
          protocol: TCP
        args:
          - --auto-generate-certificates
          # Uncomment the following line to manually specify Kubernetes API server Host
          # If not specified, Dashboard will attempt to auto discover the API server and connect
          # to it. Uncomment only if the default does not work.
          # - --apiserver-host=http://my-address:port
        volumeMounts:
        - name: kubernetes-dashboard-certs
          mountPath: /certs
          # Create on-disk volume to store exec logs
        - mountPath: /tmp
          name: tmp-volume
        livenessProbe:
          httpGet:
            scheme: HTTPS
            path: /
            port: 8443
          initialDelaySeconds: 30
          timeoutSeconds: 30
      volumes:
      - name: kubernetes-dashboard-certs
        secret:
          secretName: kubernetes-dashboard-certs
      - name: tmp-volume
        emptyDir: {}
      serviceAccountName: kubernetes-dashboard
      # Comment the following tolerations if Dashboard must not be deployed on master
      tolerations:
      - key: node-role.kubernetes.io/master
        effect: NoSchedule
---
# ------------------- Dashboard Service ------------------- #
# A nodePort was added so the Dashboard can be reached from outside;
# the default type ClusterIP was changed to NodePort.
# Without this, the Dashboard is only reachable from inside the cluster.
kind: Service
apiVersion: v1
metadata:
  labels:
    k8s-app: kubernetes-dashboard
  name: kubernetes-dashboard
  namespace: kube-system
spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 30001
  selector:
    k8s-app: kubernetes-dashboard

Run the Dashboard

kubectl create -f kubernetes-dashboard.yaml

2. Create a ServiceAccount for the Dashboard and bind it to the admin role

Save the following as dashboard-user-role.yaml:

kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: admin
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
roleRef:
  kind: ClusterRole
  name: cluster-admin
  apiGroup: rbac.authorization.k8s.io
subjects:
- kind: ServiceAccount
  name: admin
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile

Create the Dashboard user and role binding

kubectl create -f dashboard-user-role.yaml

Get the login token

kubectl describe secret/$(kubectl get secret -n kube-system |grep admin|awk '{print $1}') -n kube-system

Output:

[root@k8s-master-01 local]# kubectl describe secret/$(kubectl get secret -nkube-system |grep admin|awk '{print $1}') -nkube-system
Name:         admin-token-2mfdz
Namespace:    kube-system
Labels:       <none>
Annotations:  kubernetes.io/service-account.name: admin
              kubernetes.io/service-account.uid: 74efd994-38d8-11e9-8740-000c299624e4

Type:  kubernetes.io/service-account-token

Data
====
ca.crt:     1025 bytes
namespace:  11 bytes

token:

eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi10b2tlbi1qdjd4ayIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJhZG1pbiIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImM4ZTMxYzk0LTQ2MWEtMTFlOS1iY2M5LTAwMGMyOTEzYzUxZCIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTphZG1pbiJ9.TNw1iFEsZmJsVG4cki8iLtEoiY1pjpnOYm8ZIFjctpBdTOw6kUMvv2b2B2BJ_5rFle31gqGAZBIRyYj9LPAs06qT5uVP_l9o7IyFX4HToBF3veiun4e71822eQRUsgqiPh5uSjKXEkf9yGq9ujiCdtzFxnp3Pnpeuge73syuwd7J6F0-dJAp3b48MLZ1JJwEo6CTCMhm9buysycUYTbT_mUDQMNrHVH0868CdN_H8azA4PdLLLrFfTiVgoGu4c3sG5rgh9kKFqZA6dzV0Kq10W5JJwJRM1808ybLHyV9jfKN8N2_lZ7ehE6PbPU0cV-PyP74iA-HrzFW1yVwSLPVYA

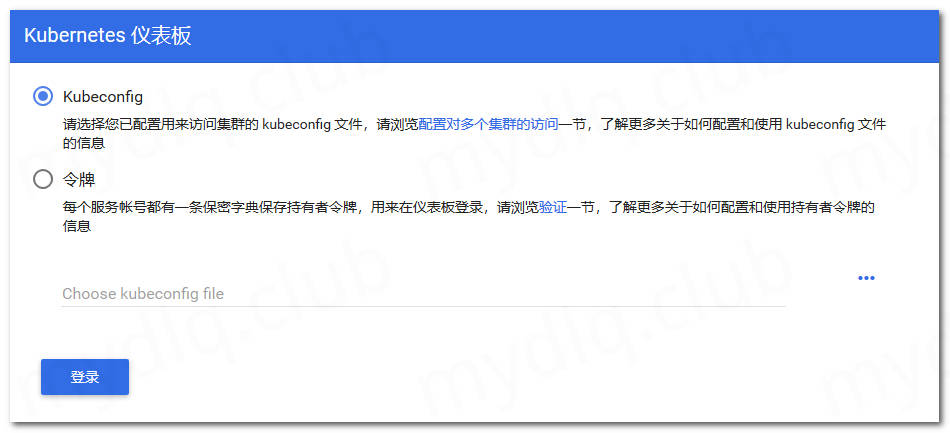
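Only the token value is needed for the login form, so it can be cut out of the describe output instead of being copied by hand. The sketch below assumes that the token appears on a line of the form `token: <value>`, which is how `kubectl describe secret` normally prints it; `extract_token` is a hypothetical helper that reads that output on standard input.

```shell
#!/bin/sh
# extract_token
# Hypothetical helper: reads `kubectl describe secret` output on stdin and
# prints only the value of the "token:" field.
extract_token() {
    awk '$1 == "token:" { print $2 }'
}

# Typical use (the secret name is looked up as in the command above):
#   kubectl -n kube-system describe secret \
#       "$(kubectl -n kube-system get secret | grep admin | awk '{print $1}')" | extract_token
```

The printed value can be pasted directly into the Dashboard login page.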

3. Open the Dashboard and log in

Open https://192.168.2.10:30001 in a browser to reach the Dashboard.

Enter the token obtained above to log in.

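Troubleshooting

1. Masters do not run workloads

By default, master nodes do not take part in running workloads. Letting them do so requires an understanding of taints.

View the taints

# Check whether each node can have workloads scheduled on it
kubectl describe nodes | grep -E '(Roles|Taints)'

Remove the taint

# Make all nodes schedulable
kubectl taint nodes --all node-role.kubernetes.io/master-
# Make a specific node schedulable
kubectl taint nodes k8s-master-01 node-role.kubernetes.io/master-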

2. Rejoining the cluster

Sometimes a node runs into problems and has to rejoin the cluster. Some state must be cleaned up before it joins again; otherwise errors such as the following may appear:

network is not ready: [runtime network not ready: NetworkReady=false reason:NetworkPluginNotReady message:docker: network plugin is not ready: cni config uninitialized]
Back-off restarting failed container

Run the steps below, then run the join command again.

# Reset the Kubernetes services and the network; remove network configuration and links
kubeadm reset

# Stop docker
systemctl stop docker

# Reset CNI
rm -rf /var/lib/cni/
rm -rf /var/lib/kubelet/*
rm -rf /etc/cni/
ifconfig cni0 down
ifconfig flannel.1 down
ifconfig docker0 down
ip link delete cni0
ip link delete flannel.1

# Start docker again
systemctl start docker

Join the cluster again (substitute your own endpoint, token, and hash):

kubeadm join cluster.kube.com:16443 --token gaeyou.k2650x660c8eb98c --discovery-token-ca-cert-hash sha256:daf4c2e0264422baa7076a2587f9224a5bd9c5667307927b0238743799dfb362
